NVIDIA H100 Tensor Core GPU: The Ultimate Powerhouse for AI and Deep Learning

- hugh

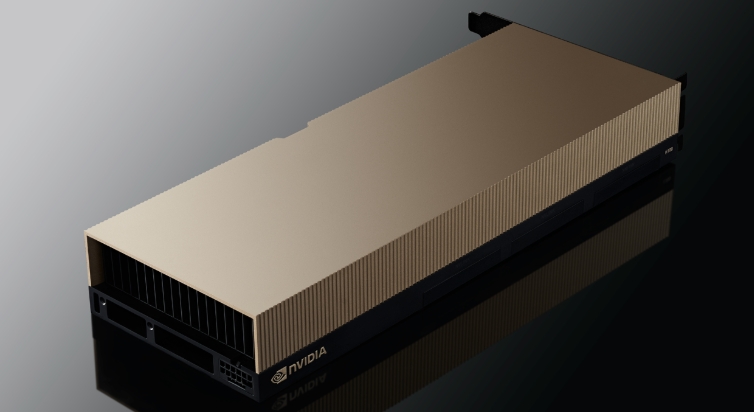

The NVIDIA H100 Tensor Core GPU is one of the most powerful GPUs designed for artificial intelligence (AI), machine learning (ML), and high-performance computing (HPC) workloads. Built on NVIDIA’s Hopper architecture, the H100 improves on its predecessors in performance, scalability, and efficiency, and it is aimed at powering the next generation of AI-driven applications. In this article, we’ll look at the features, capabilities, and potential use cases of the H100, and explore why it is widely considered the gold standard for AI workloads.

What is the NVIDIA H100 Tensor Core GPU?

The NVIDIA H100 Tensor Core GPU is a high-performance graphics processing unit engineered to accelerate a broad range of AI tasks, from training large-scale neural networks to performing real-time inference. As part of the Hopper architecture, the H100 delivers dramatic improvements in computational power, energy efficiency, and scalability over its predecessor, the A100 GPU.

The H100 Tensor Core GPU is designed to handle complex AI models and massive datasets that require enormous processing power. Its innovative architecture, along with NVIDIA’s specialized Tensor Cores, enables it to perform AI calculations more efficiently and at a faster rate, making it a crucial piece of hardware for AI researchers, data scientists, and organizations looking to leverage deep learning and other advanced AI techniques.

Key Features of the NVIDIA H100 Tensor Core GPU

- Hopper Architecture: The H100 is powered by NVIDIA’s Hopper architecture, which is optimized for AI workloads and designed to scale from small applications to the largest AI models. It improves throughput, energy efficiency, and scaling for deep learning training, model inference, and high-performance computing (HPC) applications.

- Tensor Cores for AI and ML Workloads: The standout feature of the H100 is its Tensor Cores, specialized processing units that accelerate tensor operations such as matrix multiplication, the fundamental operations in deep learning and machine learning algorithms. These cores boost performance through mixed-precision calculation, in which inputs are held in reduced-precision formats (such as FP8, FP16, BF16, and TF32) while results are typically accumulated at higher precision. They also support FP64 for HPC workloads that need full double precision.

- Massive Memory and Bandwidth: The H100 comes with up to 80 GB of high-bandwidth memory (HBM3), giving it the capacity to handle massive datasets and compute-intensive AI tasks. Its memory bandwidth, on the order of 3 TB/s on the SXM variant, lets data arrive at a rate that keeps pace with its computational power, so the GPU stays well utilized even during large-scale AI model training.

- Multi-Instance GPU (MIG) Technology: NVIDIA’s Multi-Instance GPU (MIG) technology allows the H100 to be partitioned into multiple smaller, isolated instances (up to seven), so that several AI models or tasks can run simultaneously on a single GPU. This improves resource utilization, cost-efficiency, and scalability in cloud environments, where multiple customers or applications may be sharing hardware.

- NVIDIA NVLink and NVSwitch: The H100 supports NVIDIA NVLink and NVSwitch, which provide high-speed communication between GPUs in multi-GPU setups. This is crucial for scaling AI workloads across large clusters of GPUs, which is often required when training state-of-the-art models like GPT-3, BERT, or DALL·E. These technologies increase the bandwidth and scalability of multi-GPU systems, enabling researchers to train models faster and more efficiently.

- Energy Efficiency and Performance Per Watt: One of the key benefits of the H100 is its energy efficiency. AI and machine learning tasks are computationally intensive and often require a lot of power to run. With the H100, NVIDIA has optimized the architecture to deliver better performance per watt, so data centers and cloud platforms get more work done for the power they consume.
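To make the mixed-precision idea from the Tensor Cores feature more concrete, here is a minimal pure-Python sketch that emulates it in software. It is not how Tensor Cores are programmed, and the helper names (`to_fp16`, `to_fp32`, `dot_fp16_accumulate`, `dot_mixed`) are illustrative assumptions. Rounding is emulated with the standard `struct` module. The sketch compares a dot product whose running sum is kept in FP16 with one that takes FP16 inputs but accumulates in FP32.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def dot_fp16_accumulate(a, b):
    """Dot product with FP16 inputs AND an FP16 running sum."""
    acc = 0.0
    for x, y in zip(a, b):
        prod = to_fp16(to_fp16(x) * to_fp16(y))
        acc = to_fp16(acc + prod)  # the sum is rounded to FP16 at every step
    return acc


def dot_mixed(a, b):
    """Dot product with FP16 inputs but an FP32 running sum (mixed precision)."""
    acc = 0.0
    for x, y in zip(a, b):
        prod = to_fp16(x) * to_fp16(y)  # product of two FP16 values fits in FP32
        acc = to_fp32(acc + prod)       # the sum is rounded to FP32 at every step
    return acc


ones = [1.0] * 4096
print(dot_fp16_accumulate(ones, ones))  # stalls once the sum outgrows FP16 precision
print(dot_mixed(ones, ones))            # keeps counting
```

With 4096 ones, the FP16-only sum stops growing at 2048, because 2049 is not representable in FP16 and rounds back to 2048, while the FP32 accumulator reaches 4096. This is the general reason mixed precision pairs low-precision inputs with a wider accumulator.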

Performance Benchmarks: NVIDIA H100 vs. A100 and Other GPUs

The NVIDIA H100 Tensor Core GPU offers substantial performance improvements over its predecessors, including the A100 and V100 GPUs. These improvements are especially evident in AI training, deep learning, and real-time inference.

- H100 vs. A100: The A100 GPU, based on the Ampere architecture, was previously one of the most powerful GPUs for AI workloads. The H100, built on the Hopper architecture, introduces several key advancements:

  - Tensor Core Performance: The H100 features next-generation Tensor Cores, improving the speed and efficiency of AI model training and inference.

  - Memory and Bandwidth: The H100 uses HBM3 memory (on the SXM variant), which provides much higher memory bandwidth than the A100’s HBM2/HBM2e memory and enables faster data throughput for large datasets.

  - Energy Efficiency: The H100 delivers superior performance per watt, meaning it completes more AI work per unit of energy than the A100, even though its peak power draw is higher.

- H100 vs. V100: The V100 GPU was a major step forward for AI workloads when it was released, but the H100 far outpaces it in both raw computational power and energy efficiency. The V100 is based on the Volta architecture, which, while powerful, has since been superseded by Ampere and then Hopper. The H100 offers faster matrix multiplication and higher memory capacity, and it can handle much more complex models.

- H100 vs. Other GPUs (e.g., AMD): While AMD has made significant strides in AI hardware with its Instinct series of GPUs, NVIDIA remains the dominant player in the AI space. The H100’s advantages lie in its specialized Tensor Cores, its multi-GPU scalability, and its software ecosystem. Frameworks such as TensorFlow and PyTorch are heavily optimized for NVIDIA’s CUDA and cuDNN libraries, which helps the H100 deliver strong performance in AI training and deployment.
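The comparisons above lean on performance per watt, so it may help to see how that metric is computed from a benchmark run. The sketch below is a minimal illustration: the `GpuRun` class, the `efficiency_ratio` helper, and every number in it are hypothetical placeholders, not measurements of any real GPU.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuRun:
    """One benchmark run: measured throughput and average power draw."""
    name: str
    samples_per_second: float
    avg_power_watts: float

    @property
    def samples_per_joule(self) -> float:
        # (samples / s) / (J / s) = samples / J, i.e. performance per watt
        return self.samples_per_second / self.avg_power_watts


def efficiency_ratio(new: GpuRun, old: GpuRun) -> float:
    """How many times more work per joule `new` delivers compared with `old`."""
    return new.samples_per_joule / old.samples_per_joule


# Hypothetical placeholder numbers, chosen only to show the arithmetic.
older_gpu = GpuRun("older-gpu", samples_per_second=1000.0, avg_power_watts=400.0)
newer_gpu = GpuRun("newer-gpu", samples_per_second=3000.0, avg_power_watts=600.0)

print(older_gpu.samples_per_joule)             # 2.5
print(newer_gpu.samples_per_joule)             # 5.0
print(efficiency_ratio(newer_gpu, older_gpu))  # 2.0
```

In this made-up example the newer GPU draws more power in absolute terms yet does twice the work per joule, which is the sense in which a higher-wattage part can still be the more efficient one.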

Use Cases and Applications of the NVIDIA H100 Tensor Core GPU

The NVIDIA H100 Tensor Core GPU is ideal for a variety of AI and machine learning applications, including but not limited to:

- Deep Learning Model Training: The H100 is designed to accelerate the training of large-scale neural networks and complex models like transformers, convolutional neural networks (CNNs), and reinforcement learning models. Its massive memory bandwidth and Tensor Core technology allow it to process the immense datasets required for state-of-the-art deep learning.

- Natural Language Processing (NLP): For NLP tasks like language translation, text generation, and speech recognition, H100-based systems can train large language models such as GPT-3, BERT, or T5. The H100 accelerates the processing of vast text datasets, shortening training time for NLP applications.

- Computer Vision: The H100 can power cutting-edge computer vision models for tasks like image classification, object detection, and medical image analysis. Its Tensor Cores provide the throughput needed to train models that detect and classify visual information with high accuracy.

- Autonomous Vehicles: Self-driving cars require AI models capable of processing sensor data (e.g., from LiDAR, radar, and cameras) in real time. The H100’s computational power makes it well suited to training and evaluating these models on large volumes of sensor data, where low-latency processing matters.

- AI Inference: In addition to training, the H100 excels at real-time AI inference, which is essential for applications like personal assistants, robotics, and cloud-based AI services. Its high throughput and low latency make it a strong fit for AI-driven decision-making systems.
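Whether a model from the use cases above fits on one GPU depends largely on its parameter count and numeric precision. The following back-of-the-envelope sketch estimates the memory needed for the weights alone and the minimum number of 80 GB GPUs required to hold them. The function names are illustrative, 80 GB is treated as 80 × 10^9 bytes, and the estimate deliberately ignores gradients, optimizer state, and activations, which add substantially to the real footprint during training.

```python
# Bytes needed to store one parameter in each format.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1}

# Assumption: the article's "80 GB" read as decimal gigabytes.
GPU_MEMORY_BYTES = 80 * 10**9


def weight_bytes(num_params: int, dtype: str) -> int:
    """Memory for the model weights only, in bytes."""
    return num_params * BYTES_PER_PARAM[dtype]


def min_gpus_for_weights(num_params: int, dtype: str,
                         gpu_bytes: int = GPU_MEMORY_BYTES) -> int:
    """Smallest number of GPUs whose combined memory holds the weights."""
    needed = weight_bytes(num_params, dtype)
    return -(-needed // gpu_bytes)  # integer ceiling division


# Illustrative sizes: a 7-billion-parameter model and a 175-billion-parameter
# model (the published size of GPT-3).
for params in (7_000_000_000, 175_000_000_000):
    for dtype in ("fp32", "fp16", "fp8"):
        gib = weight_bytes(params, dtype) / 10**9
        print(f"{params:>15,} params in {dtype}: {gib:6.0f} GB, "
              f"at least {min_gpus_for_weights(params, dtype)} GPU(s)")
```

Under these assumptions, a 175-billion-parameter model needs 350 GB for FP16 weights alone, more than four times the capacity of a single 80 GB GPU. This is why multi-GPU interconnects and lower-precision formats matter for models at that scale.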

Conclusion: The Future of AI with the NVIDIA H100 Tensor Core GPU

The NVIDIA H100 Tensor Core GPU is a game-changer for AI and deep learning applications. With its advanced Hopper architecture, Tensor Cores, massive memory bandwidth, and energy efficiency, the H100 sets a new standard for AI hardware and is poised to drive innovations in fields ranging from healthcare and autonomous systems to natural language processing and high-performance computing.

Whether you’re training the next generation of AI models, powering real-time inference systems, or deploying AI applications at scale, the NVIDIA H100 is the ultimate tool for accelerating the development and deployment of AI technologies.

With the H100, NVIDIA has once again solidified its position as the leader in AI hardware, enabling researchers, engineers, and organizations to push the boundaries of artificial intelligence. The future of AI is here, and it runs on the NVIDIA H100 Tensor Core GPU.